Azure Application Gateway

Features

- Load balancing HTTP, HTTPS, HTTP/2, and WebSocket traffic.

- Web application firewall.

- TLS/SSL encryption between users and the AGW.

- TLS/SSL encryption between the AGW and the backend servers.

- Uses round-robin to load balance requests.

- Session affinity (stickiness).

- Autoscaling to dynamically adjust capacity as traffic load changes.

- Connection draining to allow graceful removal of backend pool members during service updates.
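Round-robin distribution and session affinity from the list above can be sketched in a few lines. This is only an illustration: the class name `RoundRobinPool`, the server names, and the use of a plain session id are assumptions, and the gateway's actual affinity mechanism is not modelled here.

```python
from itertools import cycle


class RoundRobinPool:
    """Toy back-end pool: round-robin by default, sticky per session id."""

    def __init__(self, servers):
        self._next = cycle(list(servers))  # endless a, b, c, a, b, ...
        self._sticky = {}                  # session id -> pinned server

    def pick(self, session_id=None):
        # A known session keeps going to the server it was pinned to.
        if session_id is not None and session_id in self._sticky:
            return self._sticky[session_id]
        server = next(self._next)
        if session_id is not None:
            self._sticky[session_id] = server
        return server


pool = RoundRobinPool(["vm-a", "vm-b", "vm-c"])  # hypothetical servers
first_four = [pool.pick() for _ in range(4)]
sticky = [pool.pick(session_id="user-1") for _ in range(3)]
```

Requests without a session id rotate through the pool, while every request carrying `user-1` lands on the same server.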

Frontend IP

- AGW v1 supports a public IP, a private IP, or both.

- AGW v2 supports a public IP only, or both a public and a private IP.

A preview release of AGW v2 allows you to use only a private IP.

Back-end Pool

- Collection of target web servers.

- Supported backend targets:

  - VM

  - VMSS

  - Azure App Service

  - On-premises VM

- If using TLS/SSL, the back-end pool requires a certificate to authenticate the servers. The gateway re-encrypts the traffic using this certificate before sending the request onto the back-end pool server.

- If using Azure App Service as the back-end, configuring the certificate is not required as Azure manages the App Service, and the gateway automatically trusts it.

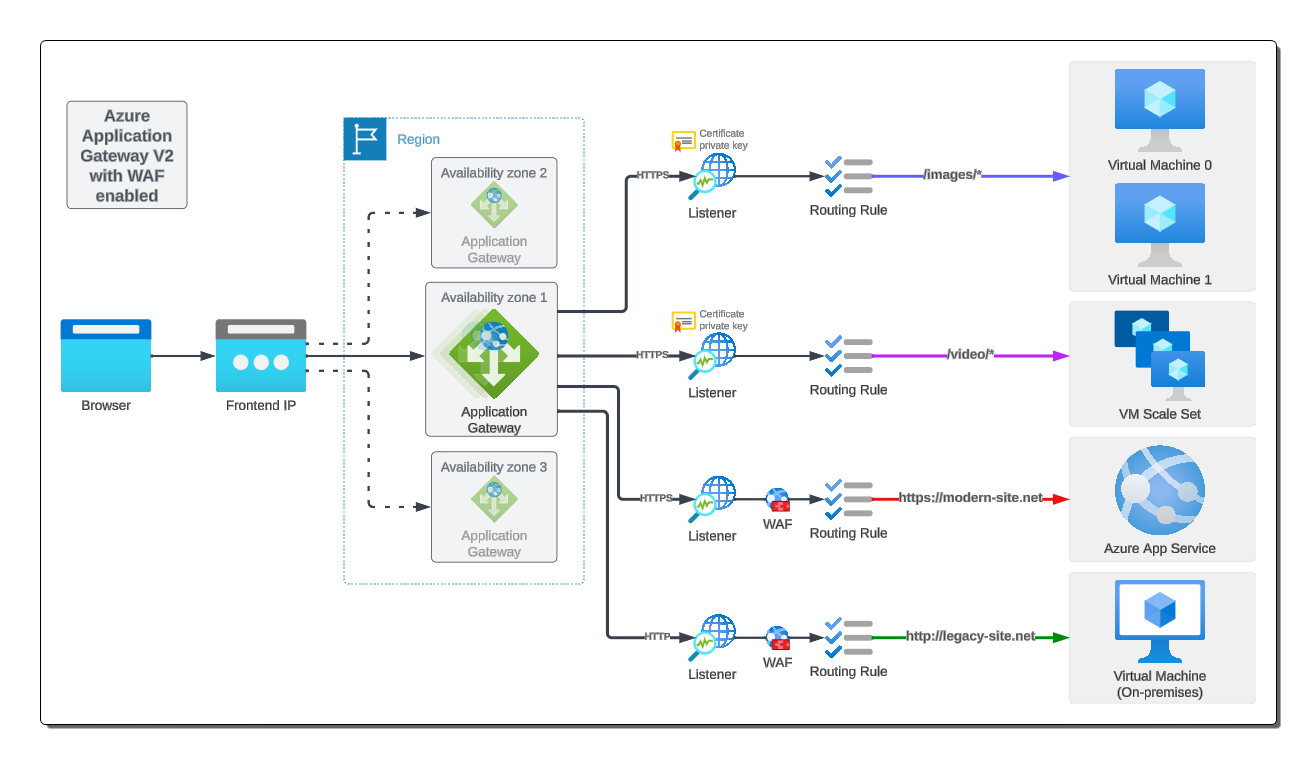

Listeners

- One or more.

- Used to receive incoming requests.

- Traffic is accepted based on a combination of protocol, port, host, and IP address.

- Each listener requires a backend pool and routing rule.

- A listener can be basic or multi-site.

  - A basic listener accepts requests for any host name, so routing can use only the path in the URL.

  - A multi-site listener matches the host name in the URL, so routing can also use the host name.

- The listener also handles the TLS/SSL certificates.

Routing Rules

- Binds a listener to a back-end pool.

- Controls how to interpret hostname and path elements of the URL in the request.

- Directs the request to the appropriate back-end pool.

- Controls HTTP settings such as:

  - Whether traffic is encrypted or not.

  - How traffic is encrypted.

- Also controls:

  - Protocol

  - Session stickiness

  - Connection draining

  - Request timeout

  - Health probes
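One way to picture how a routing rule ties these pieces together is as a small data structure. The sketch below is not the Azure resource schema: the class names, field names, and default values are all hypothetical and chosen only to mirror the items listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HttpSettings:
    """Hypothetical bundle of the settings a routing rule controls."""
    protocol: str = "HTTPS"               # protocol used towards the back end
    port: int = 443
    session_stickiness: bool = False
    connection_draining_seconds: int = 0  # 0 models "draining disabled"
    request_timeout_seconds: int = 20     # illustrative value
    health_probe_path: str = "/"


@dataclass(frozen=True)
class RoutingRule:
    """Binds one listener to one back-end pool plus its HTTP settings."""
    listener: str
    backend_pool: str
    http_settings: HttpSettings


rule = RoutingRule(
    listener="https-listener",  # hypothetical names
    backend_pool="web-pool",
    http_settings=HttpSettings(session_stickiness=True,
                               connection_draining_seconds=60),
)
```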

Web Application Firewall

In Azure Application Gateway, a Web Application Firewall (WAF) policy is applied at the listener level. This means that you can configure different WAF policies for different listeners within the same Application Gateway.

By associating a WAF policy with a specific listener, you can apply different security configurations and rules based on your requirements. This allows you to have granular control over the security settings for different applications or websites hosted behind the Application Gateway.

For example, suppose you have two listeners in your Application Gateway: Listener A and Listener B. You can assign one WAF policy to Listener A and a different WAF policy to Listener B. This enables you to have separate security configurations for the traffic received by each listener.

A WAF policy applies to every request received by its associated listener: requests received by Listener A are checked against the policy assigned to Listener A, and requests received by Listener B are checked against the policy assigned to Listener B.

In Azure Application Gateway, the request first hits the listener and then goes through the Web Application Firewall (WAF) if it is enabled.

Here is the sequence of events:

- The request is received by the listener associated with the Azure Application Gateway.

- The listener performs its initial processing, such as protocol termination and SSL decryption (if applicable), and inspects the request headers.

- If a WAF is enabled and associated with the listener, the request is then passed to the WAF for security inspection.

- The WAF analyzes the request against its configured security policies, including rules for common web application vulnerabilities, such as SQL injection or cross-site scripting (XSS).

- If the request passes the WAF’s security checks, it proceeds to the next step in the routing process based on the listener’s configured routing rules.

- If the request is flagged as potentially malicious or violates a defined security policy, the WAF either blocks the request (Prevention mode) or only logs it (Detection mode).

So, to summarize, the request first hits the listener, which handles initial processing and routing decisions. If a WAF is enabled and associated with the listener, the request then passes through the WAF for security inspection before being forwarded for further processing based on the listener’s routing rules.
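The order of events described above (listener first, then WAF, then routing) can be sketched as a tiny pipeline. The two regular expressions are toy patterns invented for this example and are far weaker than the real rule sets; the function names `inspect` and `handle` are also assumptions.

```python
import re

# Toy rules for illustration only; a real WAF uses a full managed rule set.
TOY_RULES = {
    "sql-injection": re.compile(r"('|%27)\s*or\s+1=1", re.IGNORECASE),
    "xss": re.compile(r"<script\b", re.IGNORECASE),
}


def inspect(request_target):
    """Return the names of all toy rules that the request target matches."""
    return [name for name, pattern in TOY_RULES.items()
            if pattern.search(request_target)]


def handle(request_target, waf_enabled=True):
    """Model a request the listener has already accepted.

    With the WAF enabled, the request is inspected before routing and
    blocked on any match; otherwise it goes straight to routing.
    """
    if waf_enabled:
        matched = inspect(request_target)
        if matched:
            return ("blocked", matched)
    return ("routed", [])


clean = handle("/index.html")
sqli = handle("/search?q=' OR 1=1")
xss = handle("/comment?text=<script>alert(1)</script>")
unprotected = handle("/search?q=' OR 1=1", waf_enabled=False)
```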

- Optional feature.

- Inspects incoming requests after the listener accepts them and before they are routed to the back-end pool.

- Checks each request based on common threats set by the Open Web Application Security Project (OWASP).

- Supports the following Core Rule Set (CRS) versions:

  - CRS 3.2

  - CRS 3.1 (default)

  - CRS 3.0

  - CRS 2.2.9

- Protects against the following attacks:

  - SQL injection.

  - Cross-site scripting.

  - Command injection.

  - HTTP request smuggling.

  - Response splitting.

  - Remote file inclusion.

  - Bots.

  - Crawlers.

  - Scanners.

  - HTTP protocol violations and anomalies.

Path-based routing

- Sends requests with different URL paths to different back-end servers.

  - A path matching /video/* can direct requests to the video server.

  - A path matching /images/* can direct requests to the image server.

In Azure Application Gateway, a path-based routing rule routes incoming requests according to the path of the request URL. When configuring the rule, you specify a path pattern, optionally ending in a wildcard, to match against incoming request paths.

For example, if you set the path pattern to “/images/*”, any incoming request with a path starting with “/images/” is routed to the specified backend pool.

Consider two incoming requests:

Request 1: https://example.com/images/logo.png

Request 2: https://example.com/images/gallery/photo.jpg

Both requests have paths starting with “/images/”. The Application Gateway matches them against the configured path pattern (“/images/*”) and routes them to the associated backend pool.

Path-based rules are useful when you want to direct specific types of requests (such as image requests in this case) to a dedicated backend pool. This allows you to handle different types of traffic separately and apply specific configurations or optimizations to different parts of your application.
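A minimal sketch of this kind of matching follows, assuming a trailing `/*` means "any path under this prefix" and that unmatched paths fall through to a default pool. The pool names and the helper names `matches` and `pool_for_url` are made up for the example.

```python
from urllib.parse import urlsplit

# Hypothetical rule table: (path pattern, back-end pool name).
PATH_RULES = [
    ("/images/*", "image-pool"),
    ("/video/*", "video-pool"),
]
DEFAULT_POOL = "default-pool"


def matches(pattern, path):
    """Treat a trailing '/*' as a prefix wildcard; otherwise match exactly."""
    if pattern.endswith("/*"):
        return path.startswith(pattern[:-1])  # "/images/*" -> "/images/"
    return path == pattern


def pool_for_url(url):
    """Return the pool of the first rule whose pattern matches the URL path."""
    path = urlsplit(url).path
    for pattern, pool in PATH_RULES:
        if matches(pattern, path):
            return pool
    return DEFAULT_POOL


logo = pool_for_url("https://example.com/images/logo.png")
photo = pool_for_url("https://example.com/images/gallery/photo.jpg")
video = pool_for_url("https://example.com/video/intro.mp4")
other = pool_for_url("https://example.com/about")
```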

Multi-site routing

- Often used for multi-tenant configurations.

- Allows more than one web application on the same AGW.

- You register multiple DNS names (CNAME records) that point to the front-end IP of the AGW.

- Separate listeners are configured per site (DNS).

- Each listener is matched to separate routing rules.

- Each routing rule can send requests to a separate backend pool.

Multi-site routing in Azure Application Gateway allows you to route incoming requests to different backend pools based on the host header or domain name of the request. This feature is useful when you have multiple websites or applications hosted behind the Application Gateway, each with its own domain or subdomain.

With multi-site routing, you can configure multiple listeners, each associated with a specific domain or subdomain. Each listener can have its own routing rules, which define how the incoming requests for that specific domain should be routed to the appropriate backend pool.

For example, let’s say you have two websites: site1.example.com and site2.example.com. You can configure two listeners in the Application Gateway, each associated with one of the websites. The listeners will inspect the host header of the incoming requests and route them accordingly.

Listener 1: Associated with site1.example.com

Routing rule 1: Route requests with host header “site1.example.com” to Backend Pool 1

Listener 2: Associated with site2.example.com

Routing rule 2: Route requests with host header “site2.example.com” to Backend Pool 2

When a request arrives at the Application Gateway, it checks the host header and routes the request to the appropriate backend pool based on the configured routing rules.

This way, you can host multiple websites or applications behind a single Application Gateway and route incoming traffic to different backend pools based on the domain or subdomain of the request.
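The host-header lookup in the example above amounts to a small mapping. The sketch below assumes host names are compared case-insensitively and that any port suffix in the header is ignored; the function name `pool_for_host` is hypothetical.

```python
# Host name -> back-end pool, mirroring the two-site example above.
SITES = {
    "site1.example.com": "Backend Pool 1",
    "site2.example.com": "Backend Pool 2",
}


def pool_for_host(host_header):
    """Return the pool for a Host header value, or None if no site matches."""
    # Drop an optional ":port" suffix and normalise case before the lookup.
    host = host_header.split(":")[0].strip().lower()
    return SITES.get(host)


site1 = pool_for_host("site1.example.com")
site2 = pool_for_host("SITE2.example.com:443")
unknown = pool_for_host("other.example.com")
```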

Redirection

Redirection in Azure Application Gateway allows you to configure rules to redirect incoming requests to a different URL or location. This can be useful for scenarios such as enforcing HTTPS redirection, redirecting from one domain to another, or redirecting specific paths to different destinations.

For example, let’s consider the following scenario:

You have a website called “example.com” and you want to enforce HTTPS redirection, meaning that all incoming requests should be redirected to the HTTPS version of the website. You can achieve this using Azure Application Gateway’s redirection feature.

To configure the redirection, you would set up a listener in the Application Gateway with the HTTP protocol and port 80. Then, you would configure a redirection rule that redirects all HTTP requests to the HTTPS version of the website.

Here is an example configuration:

Listener:

- Protocol: HTTP

- Port: 80

Redirection rule:

- Source: http://example.com/*

- Destination: https://example.com/{path}

- Redirect type: Permanent (301)

With this configuration, whenever a user accesses http://example.com/some-page, the Application Gateway will redirect them to https://example.com/some-page. The redirection rule ensures that all HTTP requests to the website are automatically redirected to the HTTPS version.

You can create more advanced redirection rules based on your requirements. For instance, you can redirect specific paths or domains to different destinations or even add query string parameters to the redirected URL.

Redirection in Azure Application Gateway allows you to effectively manage URL redirection and ensure that traffic is directed to the desired locations based on your specified rules.
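The effect of the HTTP-to-HTTPS rule can be sketched as a pure function that maps a request URL to a status code and a `Location` value. This models only the outcome of the rule, not how the gateway is configured; `https_redirect` is a name invented for the example, and keeping the query string is an assumption.

```python
from urllib.parse import urlsplit, urlunsplit


def https_redirect(url):
    """Return (301, https URL) for an http URL, or None if no redirect applies."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    # Keep host, path, and query; drop any explicit port such as ":80".
    location = urlunsplit(("https", parts.hostname, parts.path, parts.query, ""))
    return 301, location


page = https_redirect("http://example.com/some-page")
with_query = https_redirect("http://example.com:80/search?q=agw")
already_secure = https_redirect("https://example.com/some-page")
```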

Redundancy

In Azure Application Gateway v2, redundancy is achieved by leveraging the Azure Load Balancer’s internal mechanisms. Application Gateway v2 benefits from the inherent high availability and redundancy features provided by Azure Load Balancer.

Backend Pool Redundancy: Application Gateway v2 allows you to configure multiple backend pools containing the same set of backend instances. These backend instances can be virtual machines, virtual machine scale sets, or Azure App Service instances. By distributing the backend instances across multiple availability zones or fault domains, you can ensure high availability and redundancy for your application backends.

Load Balancing Redundancy: Azure Load Balancer distributes incoming traffic across multiple instances of the Application Gateway to achieve high availability. The load balancer monitors the health of individual Application Gateway instances and automatically routes traffic to healthy instances. This ensures that even if one or more Application Gateway instances become unavailable, traffic will still be directed to the healthy instances.

To provide redundancy for the Application Gateway itself, you can configure it with multiple instances within an Availability Zone or across multiple Availability Zones. Azure Availability Zones are physically separate datacenter locations within an Azure region, each with independent power, cooling, and networking infrastructure. By deploying Application Gateway instances across multiple Availability Zones, you can ensure that even if one zone goes down, the other zone(s) can continue to handle the traffic.

When you configure multiple instances of Application Gateway in an HA configuration, Azure Load Balancer automatically distributes incoming traffic across these instances, ensuring load balancing and failover capabilities. The Load Balancer continuously monitors the health of the Application Gateway instances and directs traffic to healthy instances.

In Azure Application Gateway, the frontend IP is associated with the Application Gateway itself, not with individual instances. Instances in an HA configuration do not each get their own frontend IP.

Azure Load Balancer, which sits in front of the Application Gateway instances, acts as the single entry point for external traffic and distributes it across the available instances based on its load balancing algorithm and the health of each instance. This provides high availability and load balancing across the instances behind one frontend IP.

Availability zones

When you create an Azure Application Gateway v2 and choose Availability Zone 1, 2, and 3, you are ensuring that the Application Gateway is distributed across multiple physically isolated datacenter locations within a single Azure region. This helps to increase availability and fault tolerance for your application.

Here’s a breakdown of how this configuration works:

Availability Zones: Azure regions are divided into Availability Zones, which are separate datacenter locations. Each Availability Zone has its own power, cooling, and networking infrastructure. By selecting Availability Zone 1, 2, and 3 during the creation of your Application Gateway, you’re distributing the gateway’s resources across these zones.

Application Gateway Instances: When you create an Application Gateway, multiple instances are automatically provisioned within the selected Availability Zones. These instances work together to provide high availability and redundancy for your gateway.

Load Balancing: Azure Load Balancer is used to distribute incoming traffic across the Application Gateway instances within the specified Availability Zones. The Load Balancer continuously monitors the health of the instances and automatically routes traffic to healthy instances, ensuring that your application remains accessible even if one or more instances or Availability Zones experience issues.

Redundancy and Resiliency: By deploying your Application Gateway across multiple Availability Zones, you’re introducing redundancy and resiliency into your infrastructure. If one Availability Zone becomes unavailable due to an outage or maintenance, the Application Gateway can continue to handle traffic through the remaining available zones.

Single frontend IP

In an Azure Application Gateway v2 deployment with multiple instances across different Availability Zones, you can use the same frontend IP regardless of which gateway instance is active or handling the traffic.

When you create an Application Gateway, you configure a frontend IP address that serves as the entry point for incoming traffic. This frontend IP remains consistent across the instances of the Application Gateway deployed in different Availability Zones.

Azure Load Balancer, which sits in front of the Application Gateway instances, automatically distributes incoming traffic across the active instances based on its load balancing algorithm. The Load Balancer ensures that traffic is routed to the appropriate active instance handling the traffic, regardless of which Availability Zone it resides in.

By utilizing the same frontend IP, you can seamlessly distribute and balance the incoming traffic across the active Application Gateway instances. This allows for high availability and load balancing capabilities while maintaining a consistent entry point for your application regardless of the specific gateway instance serving the traffic.

It’s important to note that Azure Load Balancer manages and handles the routing of traffic to the active instances based on their health and availability, ensuring a smooth experience for your application users.

Autoscaling

In Azure Application Gateway v2, the “autoscaling” option refers to the ability to automatically adjust the number of instances (gateways) based on traffic load. If you choose “no” for autoscaling, the gateway runs a fixed number of instances that you set manually. If you choose “yes,” you can configure autoscaling to dynamically scale the number of instances based on traffic patterns.

When you enable autoscaling and specify a minimum and maximum number of instances (gateways) between x and y, the Application Gateway will automatically adjust the number of instances to handle incoming traffic. This provides flexibility and ensures that your application can dynamically scale up or down based on demand.

If traffic increases, the autoscaling feature can add additional instances to handle the higher load, and as traffic decreases, it can reduce the number of instances to optimize resource utilization and cost.

By leveraging autoscaling in Azure Application Gateway v2, you can ensure that your application has the capacity to handle varying levels of traffic without manual intervention. This allows for efficient resource utilization and provides a seamless experience for your application users during periods of high demand.
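The scale-up and scale-down behaviour within a configured range can be sketched as a clamp. The signal the gateway actually scales on is not described here, so `current_load` and `per_instance_capacity` are hypothetical stand-ins (for example, requests per second), and `target_instances` is a name made up for this sketch.

```python
import math


def target_instances(current_load, per_instance_capacity, minimum, maximum):
    """Instances needed for the load, kept within [minimum, maximum]."""
    needed = math.ceil(current_load / per_instance_capacity)
    return max(minimum, min(maximum, needed))


# Hypothetical numbers: each instance handles 50 units of load, range 3-6.
quiet = target_instances(100, 50, minimum=3, maximum=6)
busy = target_instances(250, 50, minimum=3, maximum=6)
spike = target_instances(1000, 50, minimum=3, maximum=6)
```

Low load never drops the count below the minimum, and a spike never pushes it above the maximum.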

In Azure Application Gateway v2, autoscaling supports the use of Availability Zones. When you configure autoscaling with a range of 3-6 gateway instances and select three Availability Zones, the range applies to the gateway as a whole, not to each zone, so the gateway never runs more than 6 instances in total.

Here’s how this works:

Autoscaling Range: An autoscaling range of 3-6 gateway instances means that the Application Gateway can automatically scale the number of instances up to a maximum of 6 and down to a minimum of 3 based on traffic load.

Availability Zones: If you have three Availability Zones configured, the Application Gateway spreads the autoscaled instances across these zones to ensure high availability and fault tolerance.

Maximum: With a range of 3-6 instances and three Availability Zones, the gateway runs at most 6 instances spread across the zones (for example, two per zone), not 6 instances in each zone.

By utilizing autoscaling with support for Availability Zones, you can ensure that the Application Gateway dynamically adjusts its capacity across multiple zones to handle varying levels of traffic. This provides high availability, fault tolerance, and efficient resource utilization for your application.

TLS/SSL termination

- Offloads the TLS/SSL termination at the AGW instead of the back-end pool server.

- Back-end pool servers also do not need the TLS/SSL certificate to be installed.

- If end-to-end encryption is required then AGW can re-encrypt the request prior to sending it onto the back-end pool server.

- Decryption is needed so that the AGW can read the URL and match it against the routing rules to forward the request to the correct back-end pool server.

Health probes

- Determines which servers in the back-end pool are available to receive incoming requests.

- The AGW sends a request to each back-end pool server; if the response has an HTTP status code in the range 200-399, the server is considered healthy.
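The 200-399 rule can be expressed as a simple classification over probe results. In this sketch the probe responses are supplied as a dictionary rather than fetched over the network, `None` stands for a probe that got no response, and the server names are hypothetical.

```python
def is_healthy(status_code):
    """A probe response counts as healthy when its status is 200-399."""
    return status_code is not None and 200 <= status_code <= 399


def healthy_servers(probe_results):
    """Return the servers whose latest probe response was healthy."""
    return [server for server, status in probe_results.items()
            if is_healthy(status)]


# Hypothetical probe results: server -> last status code (None = no response).
results = {"vm-a": 200, "vm-b": 503, "vm-c": 302, "vm-d": None}
available = healthy_servers(results)
```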

Autoscaling

- Automatically scale the AGW up or down based on traffic.

- Removes the need to set a static instance count.

Network requirements

- An Azure VNet.

- Dedicated subnet.

- Scaling requires multiple IP addresses, one private IP per AGW instance.

- For example, four AGW instances (count) need at least a /28 subnet (16 addresses, 5 reserved by Azure, 11 usable).
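The subnet arithmetic can be checked with Python's standard `ipaddress` module. The sketch assumes Azure reserves 5 addresses in every subnet, that each gateway instance takes one private IP, and that a private frontend IP takes one more; the address range and function names are made up for the example.

```python
import ipaddress

AZURE_RESERVED = 5  # addresses Azure keeps for itself in each subnet


def usable_addresses(cidr):
    """Addresses left in the subnet after Azure's reserved ones."""
    return ipaddress.ip_network(cidr).num_addresses - AZURE_RESERVED


def fits(cidr, instance_count, private_frontend=False):
    """True if the subnet can hold the instances (plus a private frontend IP)."""
    needed = instance_count + (1 if private_frontend else 0)
    return needed <= usable_addresses(cidr)


slash_28 = usable_addresses("10.0.0.0/28")   # 16 - 5
slash_29 = usable_addresses("10.0.0.0/29")   # 8 - 5
four_in_28 = fits("10.0.0.0/28", 4)
four_in_29 = fits("10.0.0.0/29", 4)
```

A /29 leaves too few addresses for four instances, which is why /28 is the minimum in the example above.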

Private Application Gateway deployment (preview)

Register for preview

- Per subscription.

- Takes about 30 minutes to take effect.

Differences between AGW v1 and v2

| Feature | Application Gateway Standard_v1 | Application Gateway Standard_v2 |
|---|---|---|
| Architecture | Legacy | Next-generation |
| Load Balancing | Basic | Basic |
| Performance | Lower | Higher |
| Scalability | Limited | Improved |
| Availability | Limited | Zone redundancy supported |
| SSL Termination | Slower | Faster |
| Web Application Firewall | Basic | Improved |
| Autoscaling | Not supported | Supported |
| Public IP required? | No | Yes (unless using preview SKU) |

AGW Standard v1

https://learn.microsoft.com/en-us/azure/application-gateway/v1-retirement

- On April 28, 2026, AGW v1 will be retired.

- This means any AGW v1 still in running status will be stopped and eventually deleted.

- From July 1, 2023, subscriptions without an existing AGW v1 cannot create a new AGW v1.

- From August 28, 2024, no subscription can create a new AGW v1.

References

https://learn.microsoft.com/en-us/azure/application-gateway/

https://docs.microsoft.com/en-us/azure/application-gateway/overview

https://docs.microsoft.com/en-us/training/modules/intro-to-azure-application-gateway/

https://docs.microsoft.com/en-us/training/modules/load-balancing-https-traffic-azure/

https://docs.microsoft.com/en-us/training/modules/load-balance-web-traffic-with-application-gateway/

https://docs.microsoft.com/en-us/training/modules/end-to-end-encryption-with-app-gateway/
